Here is a test image to see if your computer and monitor are currently able to display subtle differences in colors near white. Click on it to see it full size. If you look at the image from an angle on an LCD monitor, it should clearly show horizontal bands that get progressively darker down the screen.

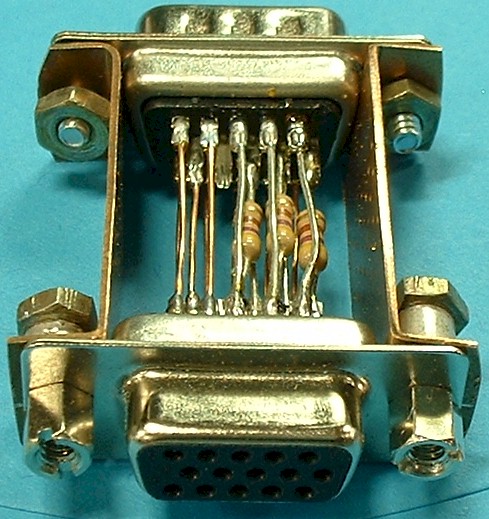

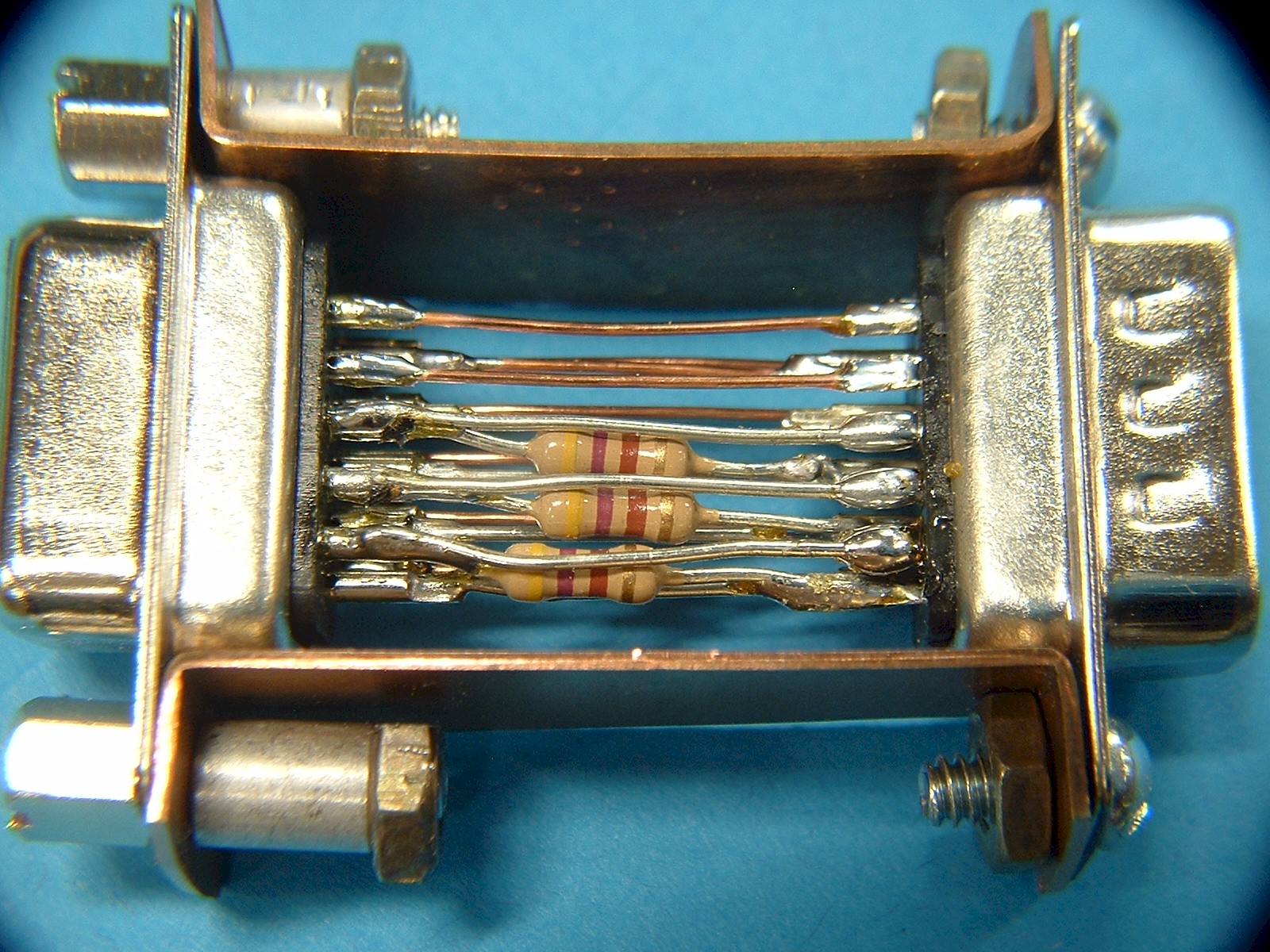

To reduce the analog signals so that the dynamic range is restored, an attenuator can be placed in the video cable path. This is simply a device that scales the signals down so that the maximum voltage is 0.7 V. A simple passive version can be made with three resistors (one for each color signal), a male VGA connector, and a female VGA connector. The resistors should be as high a resistance as possible while still allowing the lightest shades of gray to be discernible. On my computer I had to use 470 ohm resistors. There is no danger of overloading the circuit, because its impedance is low relative to the resistor values: typical output and input impedances are in the 50 to 75 ohm range, so the 470 ohm resistors will not load it significantly.
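To get a feel for how much a given resistor value reduces the signal, here is a rough Python sketch. It rests on assumptions that are not spelled out above: that each resistor is wired as a shunt from one color line to ground, and that the video card output and the monitor input are both 75 ohms. The function names `shunt_attenuation` and `shunt_for_ratio` are made up for this illustration.

```python
def shunt_attenuation(r_shunt, r_source=75.0, r_load=75.0):
    """Ratio of the signal voltage with the shunt resistor fitted
    to the voltage without it.

    Assumed model (not confirmed by the text above): a source of
    impedance r_source drives a monitor input of impedance r_load,
    and r_shunt is connected from the signal line to ground.
    """
    # Monitor termination in parallel with the added shunt resistor.
    r_parallel = (r_load * r_shunt) / (r_load + r_shunt)
    # Voltage divider fractions with and without the shunt.
    with_shunt = r_parallel / (r_source + r_parallel)
    without_shunt = r_load / (r_source + r_load)
    return with_shunt / without_shunt


def shunt_for_ratio(ratio, r_source=75.0, r_load=75.0):
    """Shunt resistance that gives the requested attenuation ratio
    (0 < ratio < 1) under the same assumed model."""
    if not 0.0 < ratio < 1.0:
        raise ValueError("ratio must be between 0 and 1")
    series_sum = r_source + r_load
    product = r_source * r_load
    return ratio * product / (series_sum * (1.0 - ratio))


if __name__ == "__main__":
    ratio = shunt_attenuation(470.0)
    print(f"470 ohm shunt keeps {ratio:.1%} of the original level")
    # Peak level that this attenuation would bring down to 0.7 V.
    print(f"source peak brought down to 0.7 V: {0.7 / ratio:.3f} V")
```

Under these assumptions a 470 ohm resistor trims the signal by roughly 7 percent, which fits the advice to use the highest resistance that still separates the lightest grays: a larger value attenuates less, a smaller one attenuates more.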